Avro Message with Kafka Connector Example

The following sample scenario demonstrates how to send Apache Avro messages to a Kafka broker through Kafka topics. It uses the publishMessages operation, which publishes messages to Kafka brokers through Kafka topics.

What you'll build

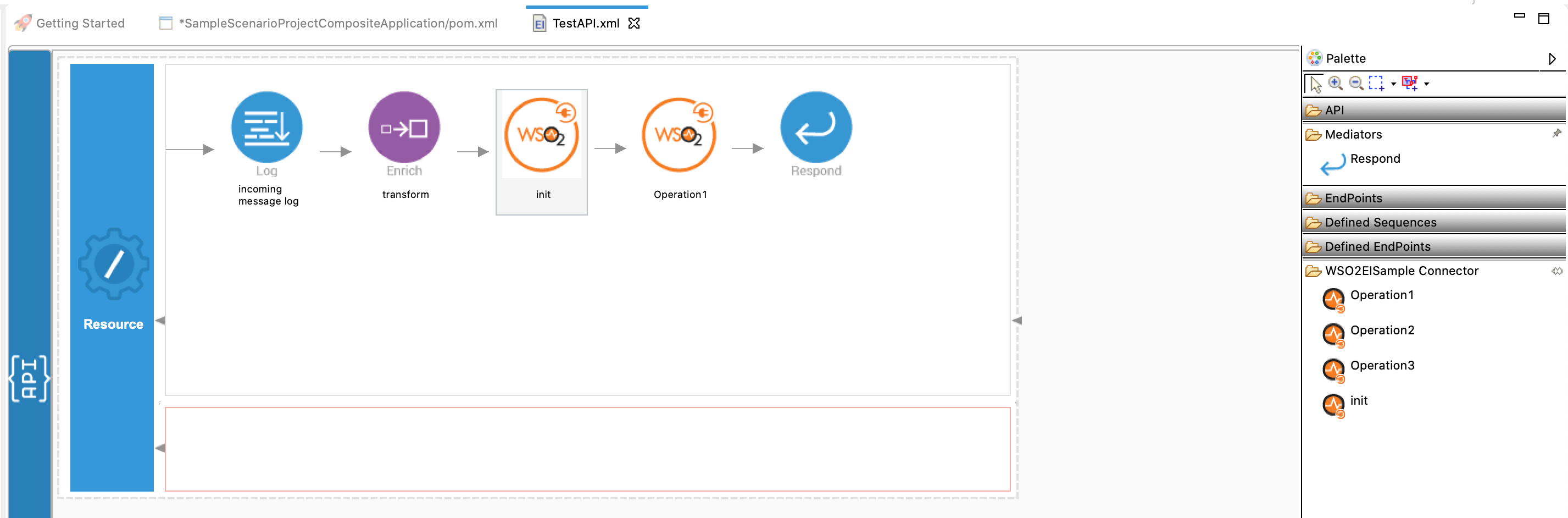

The following sample API connects to a Kafka broker with the init operation and then uses the publishMessages operation to publish messages to a topic. It exposes Kafka functionality as a RESTful service, which users can invoke over HTTP/HTTPS with the required information.

The API has the /publishMessages context. It publishes messages to the Kafka server through the specified topic.

Set up Kafka

Before you begin, set up Kafka by following the instructions in Setting up Kafka.

Configure the connector in WSO2 Integration Studio

Follow these steps to set up the Integration Project and the Connector Exporter Project.

-

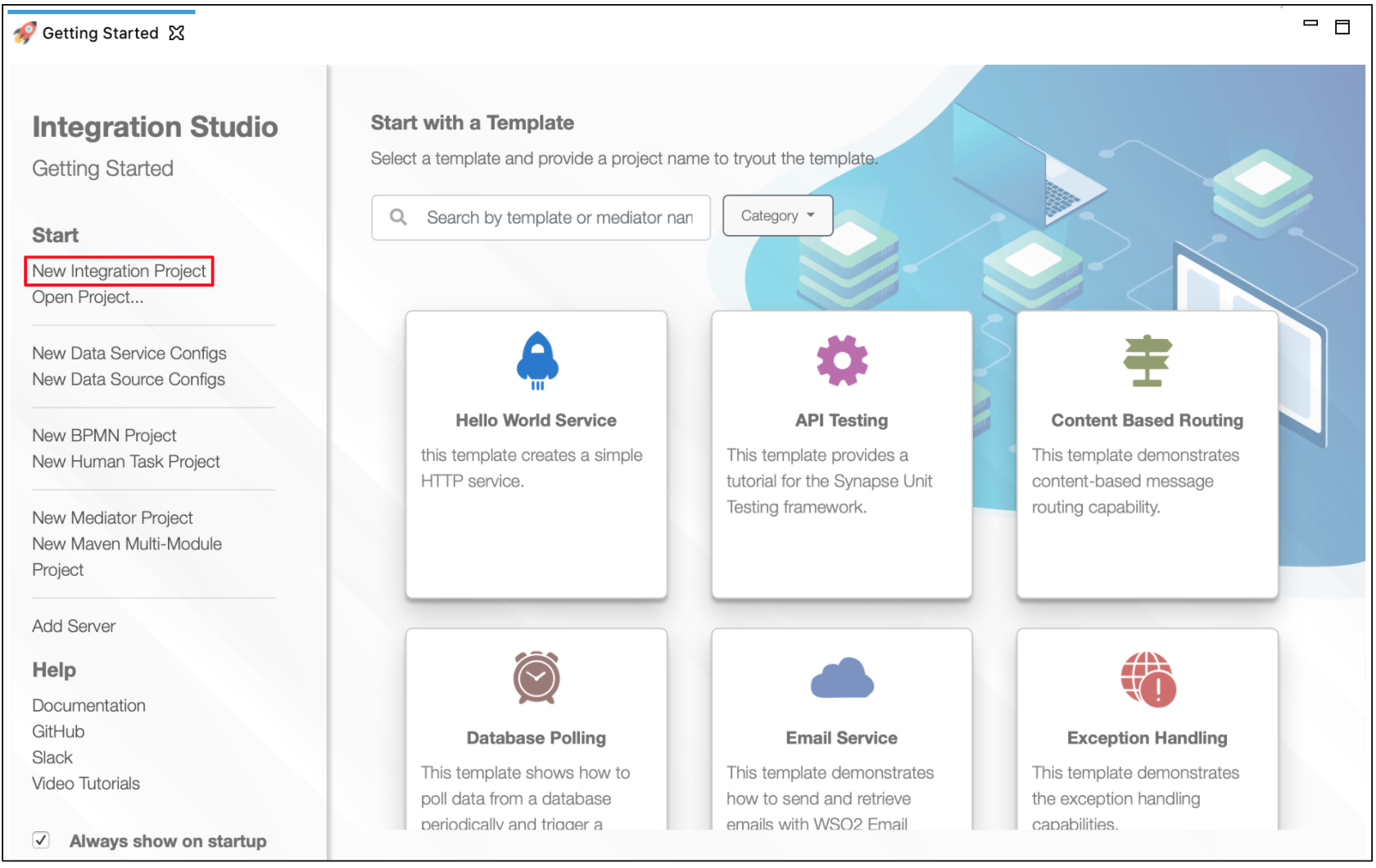

Open WSO2 Integration Studio and create an Integration Project.

-

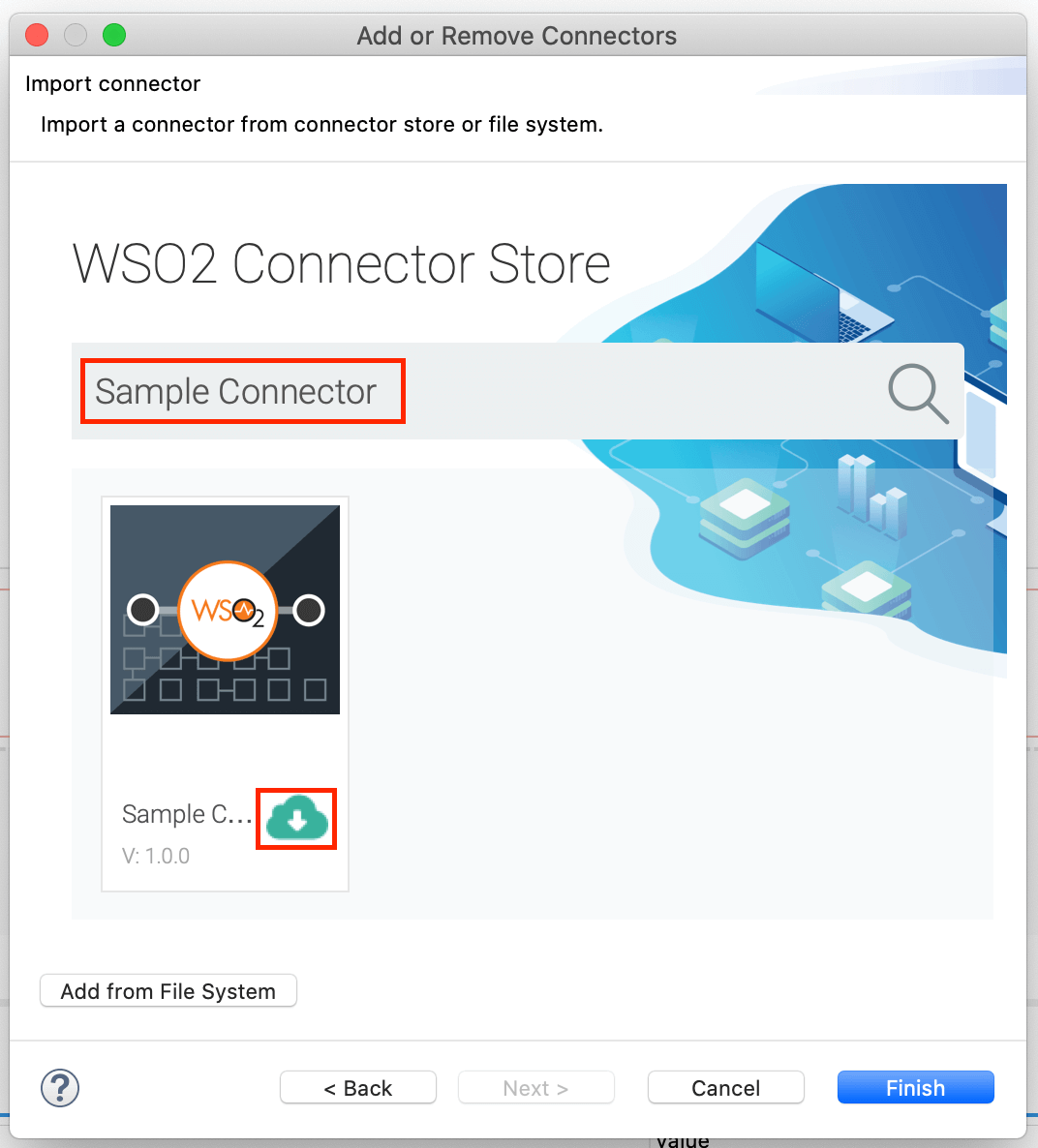

Right-click the project that you created and click on Add or Remove Connector -> Add Connector. You will get directed to the WSO2 Connector Store.

- Search for the connector required for your integration scenario and download it to the workspace.

- Click Finish. Your Integration Project is ready, and the downloaded connector is displayed on the side palette with its operations.

- Drag and drop the operations onto the design canvas to build your integration logic.

- Right-click the created Integration Project and select New -> Rest API to create the REST API.

- Specify the API name as KafkaTransport and the API context as /publishMessages. Then go to the source view of the API's XML configuration file and copy the following configuration:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<api context="/publishMessages" name="KafkaTransport" xmlns="http://ws.apache.org/ns/synapse">
    <resource methods="POST">
        <inSequence>
            <property name="valueSchema" expression="json-eval($.test)" scope="default" type="STRING"/>
            <property name="value" expression="json-eval($.value)" scope="default" type="STRING"/>
            <property name="key" expression="json-eval($.key)" scope="default" type="STRING"/>
            <property name="topic" expression="json-eval($.topic)" scope="default" type="STRING"/>
            <kafkaTransport.init>
                <name>Sample_Kafka</name>
                <bootstrapServers>localhost:9092</bootstrapServers>
                <keySerializerClass>io.confluent.kafka.serializers.KafkaAvroSerializer</keySerializerClass>
                <valueSerializerClass>io.confluent.kafka.serializers.KafkaAvroSerializer</valueSerializerClass>
                <schemaRegistryUrl>http://localhost:8081</schemaRegistryUrl>
                <maxPoolSize>100</maxPoolSize>
            </kafkaTransport.init>
            <kafkaTransport.publishMessages>
                <topic>{$ctx:topic}</topic>
                <key>{$ctx:key}</key>
                <value>{$ctx:value}</value>
                <valueSchema>{$ctx:valueSchema}</valueSchema>
            </kafkaTransport.publishMessages>
        </inSequence>
        <outSequence/>
        <faultSequence/>
    </resource>
</api>
```

Now you can export the imported connector and the API into a single CAR application. The CAR application needs to be deployed to the server runtime.
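The four property mediators in the API configuration above read fields from the JSON request body and hand them to publishMessages. The following Python sketch mimics that mapping so you can see which payload field feeds which connector parameter; note that the Avro schema travels in the field named test. It is an illustration only: extract_publish_parameters is a hypothetical name, and the sketch assumes that json-eval returns a JSON object as its serialized text and a JSON string as its bare value. The exact text the Micro Integrator produces (for example, its whitespace) may differ.

```python
import json
from typing import Any


# Hypothetical helper that mirrors the <property> mediators of the KafkaTransport API.
# Assumption: json-eval yields objects as compact JSON text and strings as plain text.
def extract_publish_parameters(payload: dict[str, Any]) -> dict[str, str]:
    def as_string(node: Any) -> str:
        # Strings pass through unchanged; objects, arrays, and numbers are re-serialized.
        return node if isinstance(node, str) else json.dumps(node, separators=(",", ":"))

    return {
        "valueSchema": as_string(payload["test"]),  # json-eval($.test)
        "value": as_string(payload["value"]),       # json-eval($.value)
        "key": as_string(payload["key"]),           # json-eval($.key)
        "topic": as_string(payload["topic"]),       # json-eval($.topic)
    }


if __name__ == "__main__":
    request_body = {
        "test": {
            "type": "record",
            "name": "myrecord",
            "fields": [{"name": "f1", "type": ["string", "int"]}],
        },
        "value": {"f1": "sampleValue"},
        "key": "sampleKey",
        "topic": "myTopic",
    }
    for name, text in extract_publish_parameters(request_body).items():
        print(f"{name} = {text}")
```

Each resulting value corresponds to one {$ctx:...} reference inside kafkaTransport.publishMessages.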

Exporting Integration Logic as a CApp

A CApp (Carbon Application) is the deployable artifact on the integration runtime. The following sections describe how to export the integration logic you developed into a CApp, along with the connector.

Creating Connector Exporter Project

To bundle a Connector into a CApp, a Connector Exporter Project is required.

-

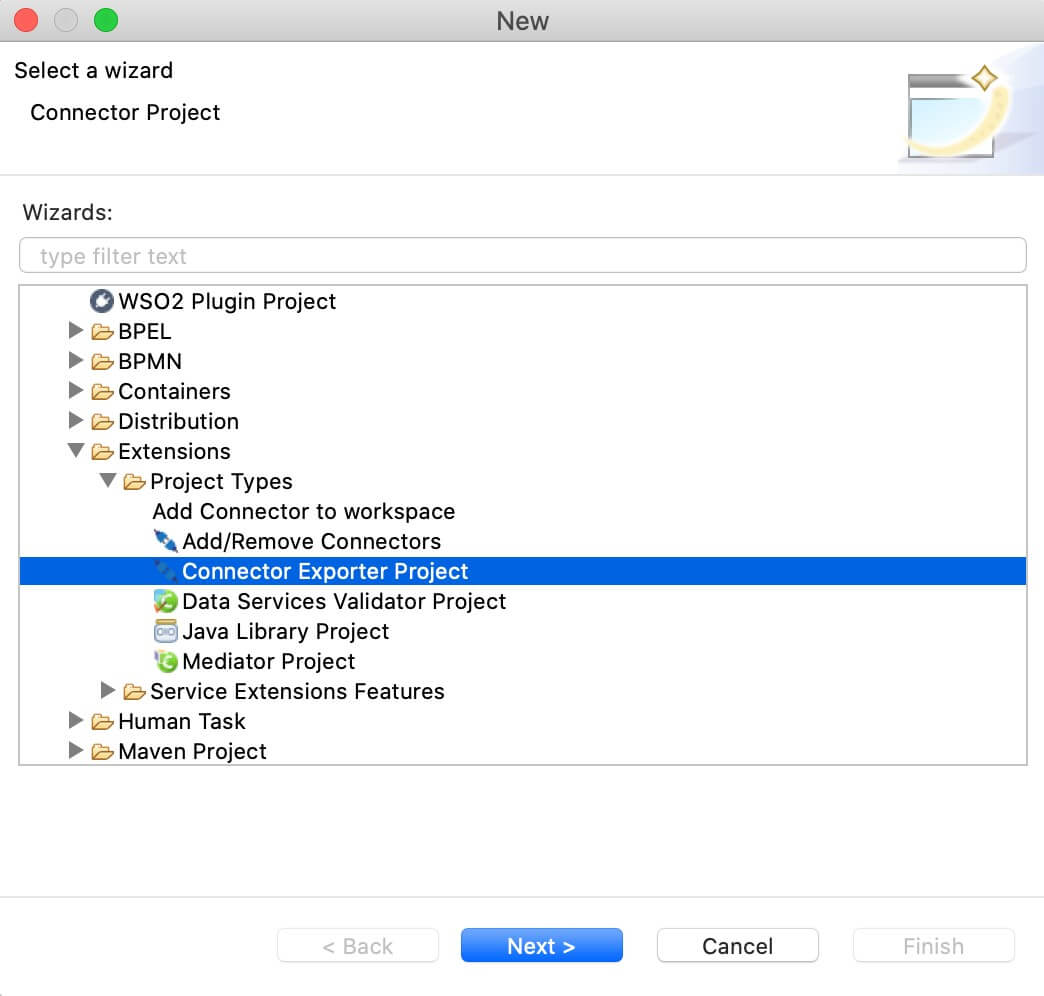

Navigate to File -> New -> Other -> WSO2 -> Extensions -> Project Types -> Connector Exporter Project.

-

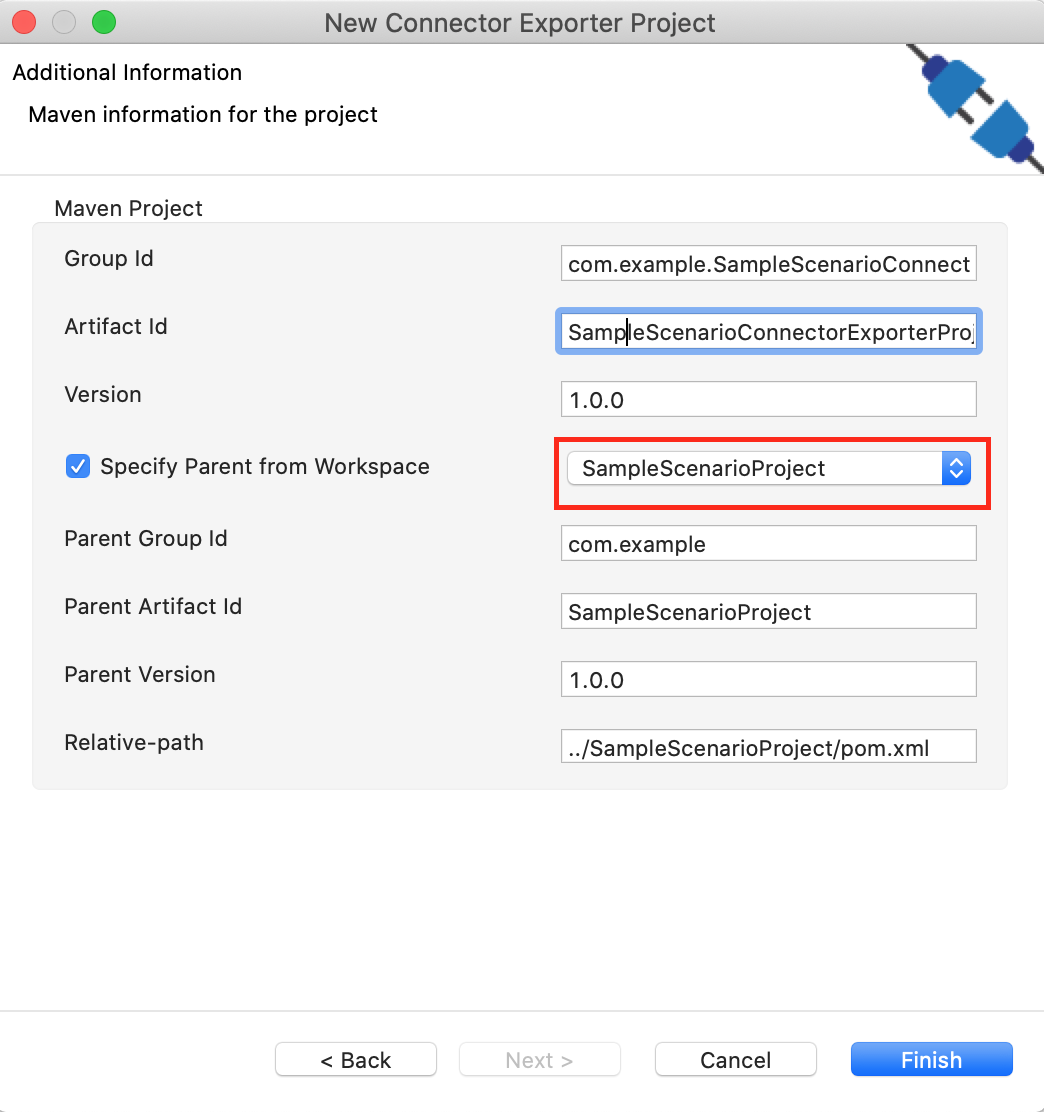

Enter a name for the Connector Exporter Project.

- On the next screen, select Specify the parent from workspace, and then select the Integration Project you created from the dropdown.

-

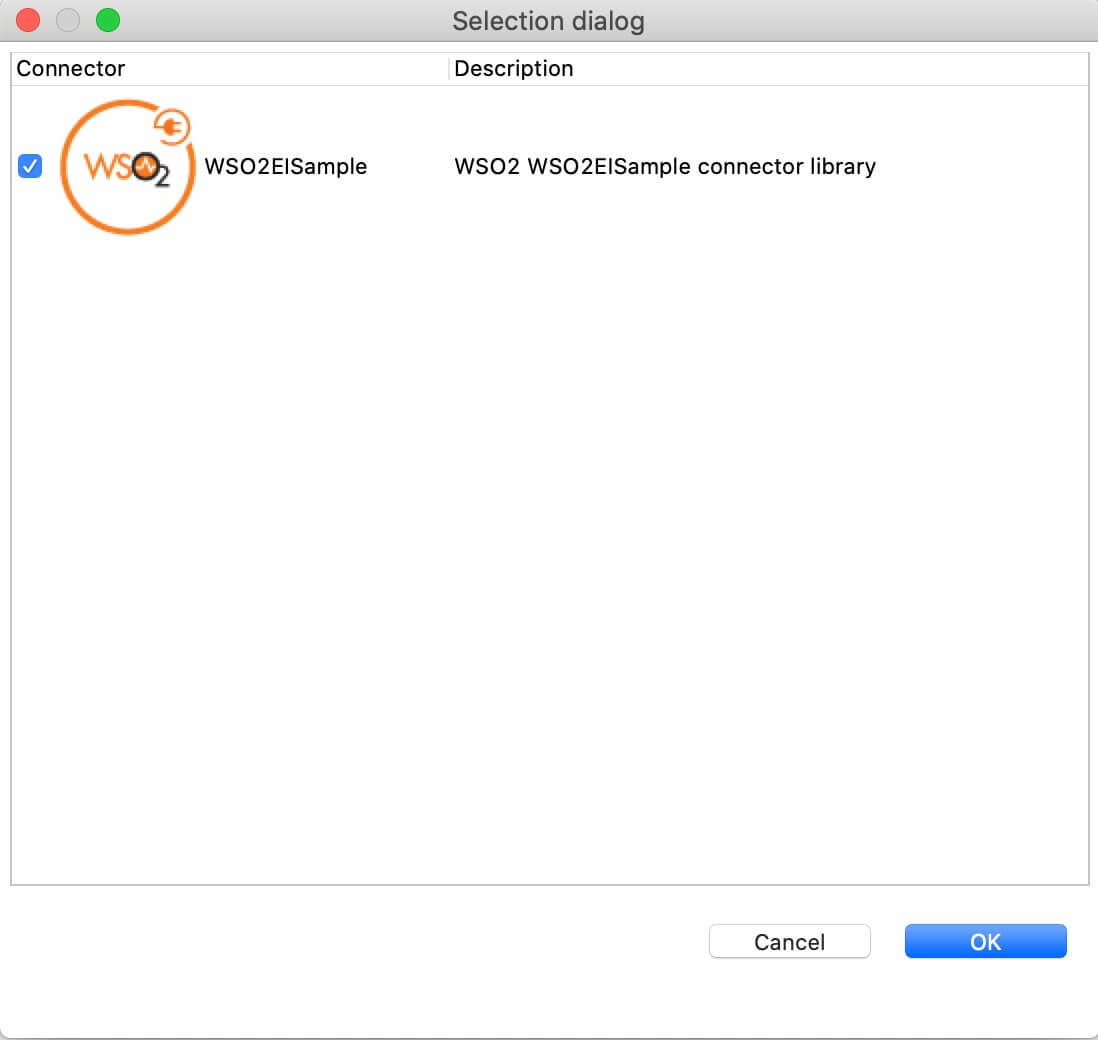

Now you need to add the Connector to Connector Exporter Project that you just created. Right-click the Connector Exporter Project and select, New -> Add Remove Connectors -> Add Connector -> Add from Workspace -> Connector

- You are directed to the workspace, which displays all the connectors it contains. Select the relevant connector and click Ok.

Creating a Composite Application Project

To export the Integration Project as a CApp, you need a Composite Application Project. Integration Studio usually creates this project as part of the Integration Project. If it does not, create it by navigating to File -> New -> Other -> WSO2 -> Distribution -> Composite Application Project.

Exporting the Composite Application Project

- Right-click the Composite Application Project and click Export Composite Application Project.

- Select an export destination where you want to save the .car file.

-

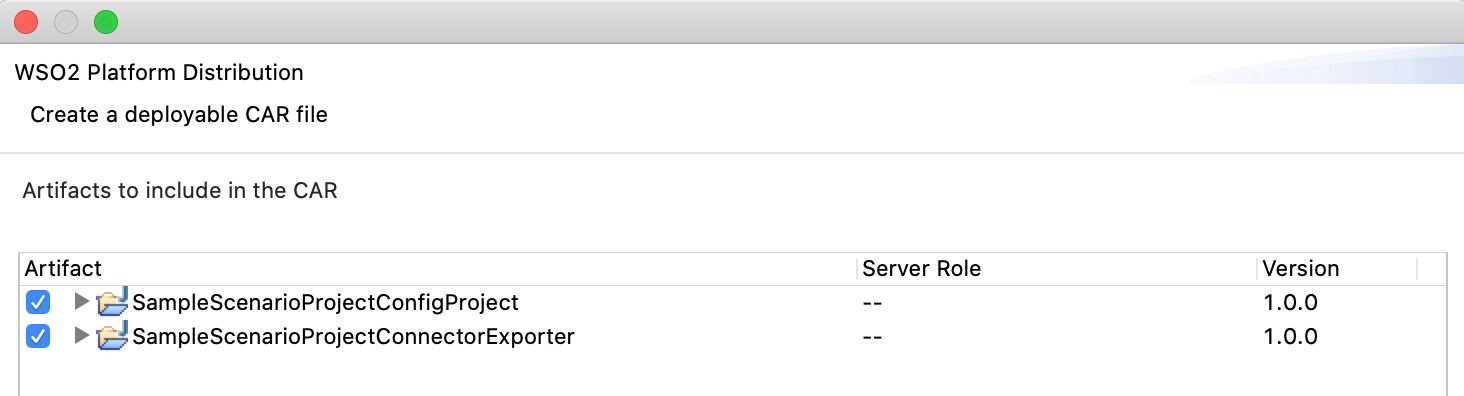

In the next Create a deployable CAR file screen, select both the created Integration Project and the Connector Exporter Project to save and click Finish. The CApp is created at the specified location provided at the previous step.

Deployment

Follow these steps to deploy the exported CApp in the Enterprise Integrator Runtime.

Deploying on Micro Integrator

Copy the composite application to the <PRODUCT-HOME>/repository/deployment/server/carbonapps folder and start the server. Micro Integrator starts and deploys the composite application.

You can also inspect the deployed application with the CLI tool. See the instructions on managing integrations from the CLI.

Deploying on WSO2 Enterprise Integrator 6

- Copy the composite application to the <PRODUCT-HOME>/repository/deployment/server/carbonapps folder and start the server.

- The WSO2 EI server starts. Log in to the Management Console at https://localhost:9443/carbon/ with your credentials. The default credentials are admin/admin.

- Verify that the API is listed as deployed under the API section.

Testing

Invoke the API (http://localhost:8290/publishMessages) with the following payload:

```json
{
  "test": {
    "type": "record",
    "name": "myrecord",
    "fields": [
      {
        "name": "f1",
        "type": ["string", "int"]
      }
    ]
  },
  "value": {
    "f1": "sampleValue"
  },
  "key": "sampleKey",
  "topic": "myTopic"
}
```
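If you prefer to call the API from code instead of a REST client, the following Python sketch uses only the standard library (urllib.request) to POST the payload above. The endpoint URL is the one used in this section; build_publish_request is a hypothetical helper name, and the sketch assumes the Micro Integrator is listening on port 8290 as in this example. Building the request is kept separate from sending it, so the payload can be inspected without a running server.

```python
import json
import urllib.request

API_URL = "http://localhost:8290/publishMessages"  # endpoint used in this example


def build_publish_request(schema: dict, value: dict, key: str, topic: str,
                          url: str = API_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying the test payload."""
    # Field names match the json-eval expressions in the KafkaTransport API;
    # the Avro schema goes in "test" because the API reads valueSchema from $.test.
    body = {"test": schema, "value": value, "key": key, "topic": topic}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    schema = {
        "type": "record",
        "name": "myrecord",
        "fields": [{"name": "f1", "type": ["string", "int"]}],
    }
    request = build_publish_request(schema, {"f1": "sampleValue"}, "sampleKey", "myTopic")
    try:
        # Requires a running Micro Integrator with the CApp deployed.
        with urllib.request.urlopen(request, timeout=10) as response:
            print(response.status, response.read().decode("utf-8"))
    except OSError as error:  # urllib.error.URLError is a subclass of OSError
        print(f"Request failed: {error}")
```

Catching OSError also covers HTTP error statuses, because urllib.error.HTTPError derives from URLError.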

Run the following command to verify the messages:

```shell
[confluent_home]/bin/kafka-avro-console-consumer.sh --topic myTopic --bootstrap-server localhost:9092 --property print.key=true --from-beginning
```

Expected output:

```json
{"f1":{"string":"sampleValue"}}
```

This demonstrates how the Kafka connector publishes Avro messages to Kafka brokers.

If the Schema Registry requires basic authentication, add the basicAuthCredentialsSource and basicAuthUserInfo parameters to the init operation. The following configuration uses the USER_INFO credentials source:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<api context="/publishMessages" name="KafkaTransport" xmlns="http://ws.apache.org/ns/synapse">
    <resource methods="POST">
        <inSequence>
            <property name="valueSchema" expression="json-eval($.test)" scope="default" type="STRING"/>
            <property name="value" expression="json-eval($.value)" scope="default" type="STRING"/>
            <property name="key" expression="json-eval($.key)" scope="default" type="STRING"/>
            <property name="topic" expression="json-eval($.topic)" scope="default" type="STRING"/>
            <kafkaTransport.init>
                <name>Sample_Kafka</name>
                <bootstrapServers>localhost:9092</bootstrapServers>
                <keySerializerClass>io.confluent.kafka.serializers.KafkaAvroSerializer</keySerializerClass>
                <valueSerializerClass>io.confluent.kafka.serializers.KafkaAvroSerializer</valueSerializerClass>
                <schemaRegistryUrl>http://localhost:8081</schemaRegistryUrl>
                <maxPoolSize>100</maxPoolSize>
                <basicAuthCredentialsSource>USER_INFO</basicAuthCredentialsSource>
                <basicAuthUserInfo>admin:admin</basicAuthUserInfo>
            </kafkaTransport.init>
            <kafkaTransport.publishMessages>
                <topic>{$ctx:topic}</topic>
                <key>{$ctx:key}</key>
                <value>{$ctx:value}</value>
                <valueSchema>{$ctx:valueSchema}</valueSchema>
            </kafkaTransport.publishMessages>
        </inSequence>
        <outSequence/>
        <faultSequence/>
    </resource>
</api>
```

Alternatively, you can set the credentials source to URL:

```xml
<basicAuthCredentialsSource>URL</basicAuthCredentialsSource>
```

Then, configure the schemaRegistryUrl parameter with the credentials embedded in the URL, as shown below:

```xml
<schemaRegistryUrl>http://admin:admin@localhost:8081</schemaRegistryUrl>
```
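In the expected output of the verification step, f1 appears as {"string":"sampleValue"} rather than as the plain string that was sent. This is because f1 is declared as the union ["string", "int"], and in the Avro specification's JSON encoding, which kafka-avro-console-consumer prints, a non-null union value is written as an object with a single key naming the chosen branch type. The following Python sketch illustrates that rule. It is a simplified illustration, not the Avro library's encoder: encode_union_json and encode_record_json are hypothetical names, and the sketch only handles flat records whose fields are unions of a few primitive types.

```python
import json
from typing import Any, Callable

# Illustrative type checks for a subset of Avro primitive types.
PRIMITIVE_CHECKS: dict[str, Callable[[Any], bool]] = {
    "null": lambda v: v is None,
    "boolean": lambda v: isinstance(v, bool),
    "int": lambda v: isinstance(v, int) and not isinstance(v, bool) and -2**31 <= v < 2**31,
    "long": lambda v: isinstance(v, int) and not isinstance(v, bool) and -2**63 <= v < 2**63,
    "double": lambda v: isinstance(v, float),
    "string": lambda v: isinstance(v, str),
}


def encode_union_json(value: Any, branches: list[str]) -> Any:
    """Return the Avro JSON encoding of a value of a union of primitive types."""
    for branch in branches:
        check = PRIMITIVE_CHECKS.get(branch)
        if check is not None and check(value):
            # A null branch is written as bare JSON null; any other branch is
            # wrapped in a one-entry object keyed by the branch's type name.
            return None if branch == "null" else {branch: value}
    raise ValueError(f"{value!r} matches no branch of {branches}")


def encode_record_json(record: dict[str, Any], schema: dict[str, Any]) -> str:
    """Encode a flat record whose fields are all unions of primitive types."""
    encoded = {
        field["name"]: encode_union_json(record[field["name"]], field["type"])
        for field in schema["fields"]
    }
    return json.dumps(encoded, separators=(",", ":"))


if __name__ == "__main__":
    schema = {
        "type": "record",
        "name": "myrecord",
        "fields": [{"name": "f1", "type": ["string", "int"]}],
    }
    print(encode_record_json({"f1": "sampleValue"}, schema))  # {"f1":{"string":"sampleValue"}}
    print(encode_record_json({"f1": 42}, schema))             # {"f1":{"int":42}}
```

The branch name in the output therefore tells you which member of the union the serializer matched for each record.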

What's next

- You can deploy and run your project on Docker or Kubernetes. See the instructions in Running the Micro Integrator on Containers.

- To customize this example for your own scenario, see Kafka Connector Configuration documentation.