Tuning Performance

This section describes some recommended performance tuning configurations to optimize WSO2 API Manager. It assumes that you have set up the API Manager on Unix/Linux, which is recommended for a production deployment.

Warning

Performance tuning requires you to modify important system files, which affect all programs running on the server. WSO2 recommends that you familiarize yourself with these files using Unix/Linux documentation before editing them.

Info

The values that WSO2 discusses here are general recommendations. They might not be the optimal values for the specific hardware configurations in your environment. WSO2 recommends that you carry out load tests on your environment to tune the API Manager accordingly.

OS-level settings

The OS that the server runs on plays an important role in performance.

Info

If you are running macOS Sierra and experiencing long startup times for WSO2 products, try mapping your Mac hostname to 127.0.0.1 and ::1 in the /etc/hosts file. For example, if your MacBook hostname is "john-mbpro.local", add the mapping to the canonical 127.0.0.1 address in the /etc/hosts file, as shown in the example below.

    127.0.0.1 localhost john-mbpro.local

The following configurations can be applied to optimize OS-level performance:

- To optimize network and OS performance, configure the following settings in the /etc/sysctl.conf file of Linux. These settings specify a larger port range, a more effective TCP connection timeout value, and a number of other important parameters at the OS level.

  Info

  It is not recommended to use net.ipv4.tcp_tw_recycle = 1 when working with network address translation (NAT), such as if you are deploying products in EC2 or any other environment configured with NAT. This parameter was also removed in Linux kernel 4.12, so omit it on newer kernels.

      net.ipv4.tcp_fin_timeout = 30
      fs.file-max = 2097152
      net.ipv4.tcp_tw_recycle = 1
      net.ipv4.tcp_tw_reuse = 1
      net.core.rmem_default = 524288
      net.core.wmem_default = 524288
      net.core.rmem_max = 67108864
      net.core.wmem_max = 67108864
      net.ipv4.tcp_rmem = 4096 87380 16777216
      net.ipv4.tcp_wmem = 4096 65536 16777216
      net.ipv4.ip_local_port_range = 1024 65535

  For more information on the above configurations, see sysctl.

- To alter the number of allowed open files for system users, configure the following settings in the /etc/security/limits.conf file of Linux (be sure to include the leading * character).

      * soft nofile 4096
      * hard nofile 65535

  Optimal values for these parameters depend on the environment.

- To alter the maximum number of processes your user is allowed to run at a given time, configure the following settings in the /etc/security/limits.conf file of Linux (be sure to include the leading * character). Each Carbon server instance you run requires up to 1024 threads (with the default thread pool configuration). Therefore, you need to increase the nproc value by 1024 for each Carbon server (both hard and soft).

      * soft nproc 20000
      * hard nproc 20000
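The nproc guidance above is simple arithmetic, and the limits that actually apply can be read from a running process. The following is a minimal sketch, assuming Python 3 on Linux. The function names `required_nproc` and `current_limits` and the baseline value of 4096 are hypothetical illustrations and are not part of WSO2 API Manager.

```python
import resource

# From the text above: each Carbon server instance needs up to 1024 threads
# with the default thread pool configuration.
THREADS_PER_CARBON_SERVER = 1024


def required_nproc(baseline: int, carbon_servers: int) -> int:
    """Return the nproc value after adding 1024 for each Carbon server.

    `baseline` is the limit needed by everything else the user runs.
    It is an assumption that you must supply for your own environment.
    """
    if baseline < 0 or carbon_servers < 0:
        raise ValueError("baseline and carbon_servers must be non-negative")
    return baseline + THREADS_PER_CARBON_SERVER * carbon_servers


def current_limits() -> dict:
    """Return the (soft, hard) limits that apply to the current process.

    A value equal to resource.RLIM_INFINITY means the limit is unlimited.
    """
    return {
        "nofile": resource.getrlimit(resource.RLIMIT_NOFILE),
        "nproc": resource.getrlimit(resource.RLIMIT_NPROC),
    }


if __name__ == "__main__":
    # Hypothetical example: a baseline of 4096 processes and two Carbon servers.
    print("suggested nproc:", required_nproc(4096, 2))
    for name, (soft, hard) in current_limits().items():
        print(f"{name}: soft={soft} hard={hard}")
```

Running the script as the user that starts the server shows whether the values in limits.conf have taken effect. Changes to that file typically apply only to new login sessions.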

JVM-level settings

When an XML element has a large number of sub-elements and the system tries to process all the sub-elements, the system can become unstable due to a memory overhead. This is a security risk.

To avoid this issue, you can define a maximum level of entity substitutions that the XML parser allows in the system. To do this, set the entity expansion limit in the <API-M_HOME>/bin/wso2server.bat file (for Windows) or the <API-M_HOME>/bin/wso2server.sh file (for Linux/Solaris), as shown below. The default entity expansion limit is 64000.

    -DentityExpansionLimit=10000

In a clustered environment, the entity expansion limit has no dependency on the number of worker nodes.

WSO2 Carbon platform-level settings

In multi-tenant mode, the WSO2 Carbon runtime limits the thread execution time. That is, if a thread is stuck or takes a long time to process, Carbon detects, interrupts, and stops it. Note that Carbon prints the thread's current stack trace before interrupting it. This mechanism is implemented as an Apache Tomcat valve. Therefore, it should be configured in the <PRODUCT_HOME>/repository/conf/tomcat/catalina-server.xml file as shown below.

    <Valve className="org.wso2.carbon.tomcat.ext.valves.CarbonStuckThreadDetectionValve" threshold="600"/>

- The className is the Java class used for the implementation. Set it to org.wso2.carbon.tomcat.ext.valves.CarbonStuckThreadDetectionValve.
- The threshold gives the minimum duration in seconds after which a thread is considered stuck. The default value is 600 seconds.
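To confirm which threshold a given catalina-server.xml actually carries, the valve element can be read programmatically. The following is a minimal sketch, assuming Python 3 and a file without XML namespaces. The function name `stuck_thread_threshold` and the simplified sample document are hypothetical; a real catalina-server.xml contains many more elements.

```python
import xml.etree.ElementTree as ET

VALVE_CLASS = "org.wso2.carbon.tomcat.ext.valves.CarbonStuckThreadDetectionValve"
DEFAULT_THRESHOLD_SECONDS = 600  # default stated in the text above


def stuck_thread_threshold(xml_text):
    """Return the valve threshold in seconds, or None if the valve is absent.

    If the valve is present without a threshold attribute, the documented
    default of 600 seconds is returned.
    """
    root = ET.fromstring(xml_text)
    for valve in root.iter("Valve"):
        if valve.get("className") == VALVE_CLASS:
            return int(valve.get("threshold", DEFAULT_THRESHOLD_SECONDS))
    return None


# Simplified, hypothetical stand-in for catalina-server.xml.
SAMPLE = f"""
<Server>
  <Service>
    <Engine>
      <Host>
        <Valve className="{VALVE_CLASS}" threshold="600"/>
      </Host>
    </Engine>
  </Service>
</Server>
"""

if __name__ == "__main__":
    print("stuck thread threshold (s):", stuck_thread_threshold(SAMPLE))
```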

API-M-level settings

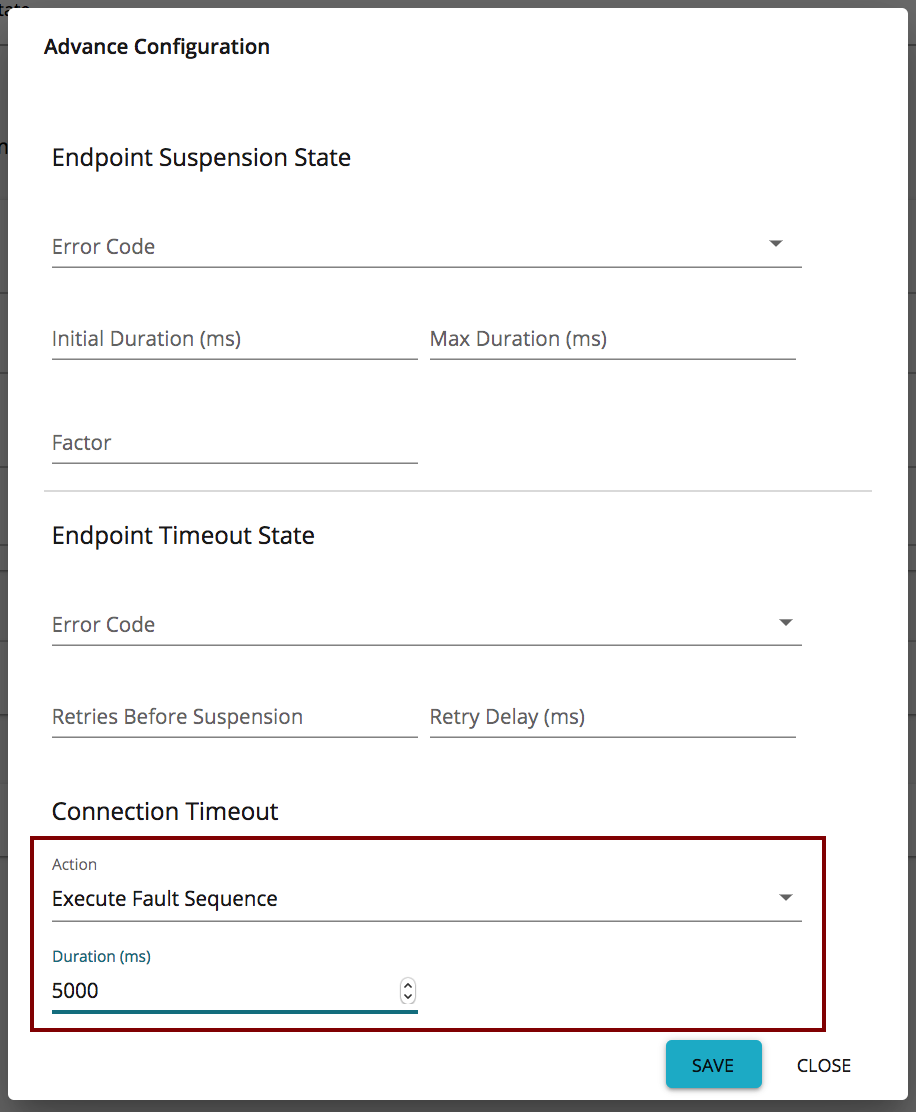

Timeout configurations for an API call

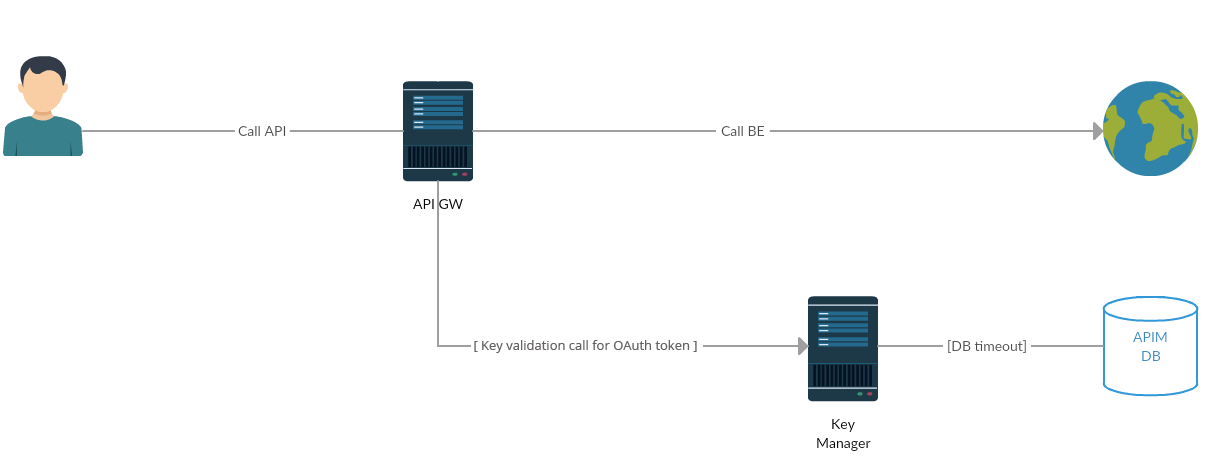

The following diagram shows the communication/network paths that occur when an API is called. The timeout configurations for each network call are explained below.

Info

The Gateway to Key Manager network call to validate the token happens only with OAuth tokens. This network call does not happen for JSON Web Tokens (JWTs). JWT access tokens are the default token type for applications. As JWTs are self-contained access tokens, the Key Manager is not needed to validate the token, and the token is validated at the Gateway.

- Client call API Gateway + API Gateway call Backend

  For backend communication, the API Manager uses PassThrough transport. This is configured in the <API-M_HOME>/repository/conf/deployment.toml file. For more information, see Configuring passthrough properties in the WSO2 Enterprise Integrator documentation. Add the following section to the deployment.toml file to configure the socket timeout value.

      [passthru_http]
      http.socket.timeout=180000

  Info

  The default value for http.socket.timeout differs between WSO2 products. In WSO2 API-M, the default value for http.socket.timeout is 180000 ms.

General API-M-level recommendations

Some general API-M-level recommendations are listed below:

| Improvement Area | Performance Recommendations |
|---|---|
| API Gateway nodes | |
| PassThrough transport of API Gateway | Recommended values for the Property descriptions Recommended values Tip: Make the number of threads equal to the number of processor cores. |
| Timeout configurations | The API Gateway routes the requests from your client to an appropriate endpoint. A client most commonly times out when the Gateway's timeout is larger than the client's timeout. You can resolve this by either increasing the timeout on the client's side or by decreasing it on the API Gateway's side. Here are a few parameters, in addition to the timeout parameters discussed in the previous sections. |
| Key Manager nodes | Set the MySQL maximum connections: Set the open files limit to 200000 by editing the Set the following in the Info: If you use WSO2 Identity Server (WSO2 IS) as the Key Manager, then the root location of the above path and the subsequent path needs to change from Set the following connection pool elements in the same file. Time values are defined in milliseconds. Note that you set the |
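The timeout guidance in the table comes down to one comparison: the client should wait at least as long as the Gateway does. The following is a minimal sketch, assuming Python 3. The function name `client_outlasts_gateway` and the 60000 ms client value are hypothetical; only the 180000 ms Gateway default comes from this page.

```python
# Default http.socket.timeout in WSO2 API-M, as stated earlier on this page.
DEFAULT_GATEWAY_SOCKET_TIMEOUT_MS = 180000


def client_outlasts_gateway(client_timeout_ms,
                            gateway_timeout_ms=DEFAULT_GATEWAY_SOCKET_TIMEOUT_MS):
    """Return True when the client waits at least as long as the Gateway.

    When this returns False, the client may give up while the Gateway is
    still waiting for the backend, which the client sees as a timeout.
    """
    if client_timeout_ms <= 0 or gateway_timeout_ms <= 0:
        raise ValueError("timeouts must be positive")
    return client_timeout_ms >= gateway_timeout_ms


if __name__ == "__main__":
    # Hypothetical client with a 60-second timeout against the default Gateway value.
    if not client_outlasts_gateway(60000):
        print("Increase the client timeout or decrease the Gateway timeout.")
```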

Registry indexing configurations

The registry indexing process, which indexes the APIs in the Registry, needs to run only on the API Publisher and Developer Portal nodes. To disable the indexing process on the other nodes (Gateways and Key Managers), add the following configuration section to the <API-M_HOME>/repository/conf/deployment.toml file.

    [indexing]
    enable = false

Rate limit data and Analytics-related settings

This section describes the parameters you need to configure to tune the performance of API-M Analytics and rate-limiting when it is affected by high load, network traffic, etc. You need to tune these parameters based on the deployment environment.

Tuning data-agent parameters

The following parameters should be configured in the <API-M_HOME>/repository/conf/deployment.toml file. Note that there are two sub-sections related to Thrift and Binary.

    [transport.thrift.agent]
    :
    [transport.binary.agent]
    :

| Parameter | Description | Default Value | Tuning Recommendation |
|---|---|---|---|
| queue_size | The number of messages that can be stored in WSO2 API-M at a given time before they are published to the Analytics Server. | 32768 | This value should be increased when the Analytics Server is busy due to a request overload or if there is high network traffic. This prevents the generation of the When the Analytics Server is not very busy and when the network traffic is relatively low, the queue size can be reduced to avoid overconsumption of memory. Info: The number specified for this parameter should be a power of 2. |
| batch_size | The WSO2 API-M statistical data sent to the Analytics Server to be published in the Analytics Dashboard are grouped into batches. This parameter specifies the number of requests to be included in a batch. | 200 | The batch size should be tuned in proportion to the volume of requests sent from WSO2 API-M to the Analytics Server. |
| core_pool_size | The number of threads allocated to publish WSO2 API-M statistical data to the Analytics Server via Thrift at the time WSO2 API-M is started. This value increases when the throughput of statistics generated increases. However, the number of threads will not exceed the number specified for the max_pool_size parameter. | 1 | The number of available CPU cores should be taken into account when specifying this value. Increasing the core pool size may improve the throughput of statistical data published in the Analytics Dashboard, but latency will also increase due to context switching. |
| max_pool_size | The maximum number of threads that should be allocated at any given time to publish WSO2 API-M statistical data to the Analytics Server. | 1 | The number of available CPU cores should be taken into account when specifying this value. Increasing the maximum pool size may improve the throughput of statistical data published in the Analytics Dashboard, since more threads can be spawned to handle an increased number of events. However, latency will also increase since a higher number of threads causes context switching to take place more frequently. |
| max_transport_pool_size | The maximum number of transport threads that should be allocated at any given time to publish WSO2 API-M statistical data to the Analytics Server. | 250 | This value must be increased when there is an increase in the throughput of events handled by WSO2 API-M Analytics. The value of the tcpMaxWorkerThreads parameter defined under databridge.config: in the <API-M_ANALYTICS_HOME>/conf/worker/deployment.yaml file must change based on the value specified for this parameter and the number of data publishers publishing statistics. For example, when the value for this parameter is 250 and the number of data publishers is 7, the value for the tcpMaxWorkerThreads parameter must be 1750 (i.e., 7 * 250). This is because you need to ensure that there are enough receiver threads to handle the number of messages published by the data publishers. |
| secure_max_transport_pool_size | The maximum number of secure transport threads that should be allocated at any given time to publish WSO2 API-M statistical data to the Analytics Server. | 250 | This value must be increased when there is an increase in the throughput of events handled by WSO2 API-M Analytics. The value of the |
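Two of the rules in the table are arithmetic: queue_size should be a power of 2, and tcpMaxWorkerThreads should equal max_transport_pool_size multiplied by the number of data publishers. The following is a minimal sketch of both checks, assuming Python 3. The function names are hypothetical helpers and are not part of WSO2 API-M.

```python
def is_power_of_two(n):
    """Return True when n is a positive power of 2 (1, 2, 4, 8, ...)."""
    return n > 0 and (n & (n - 1)) == 0


def next_power_of_two(n):
    """Return the smallest power of 2 that is greater than or equal to n."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1 << (n - 1).bit_length()


def tcp_max_worker_threads(max_transport_pool_size, data_publishers):
    """Return the receiver thread count needed for the given publishers.

    Follows the rule in the table: one pool's worth of receiver threads
    for each data publisher.
    """
    if max_transport_pool_size < 1 or data_publishers < 1:
        raise ValueError("both arguments must be at least 1")
    return max_transport_pool_size * data_publishers


if __name__ == "__main__":
    # The default queue_size from the table is already a power of 2.
    print(is_power_of_two(32768))
    # A hypothetical target of 40000 rounds up to the next valid queue_size.
    print(next_power_of_two(40000))
    # The worked example from the table: 250 threads and 7 publishers.
    print(tcp_max_worker_threads(250, 7))
```

Rounding up rather than down keeps the queue at least as large as the intended capacity, at the cost of some additional memory.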